AI Search Is Broken

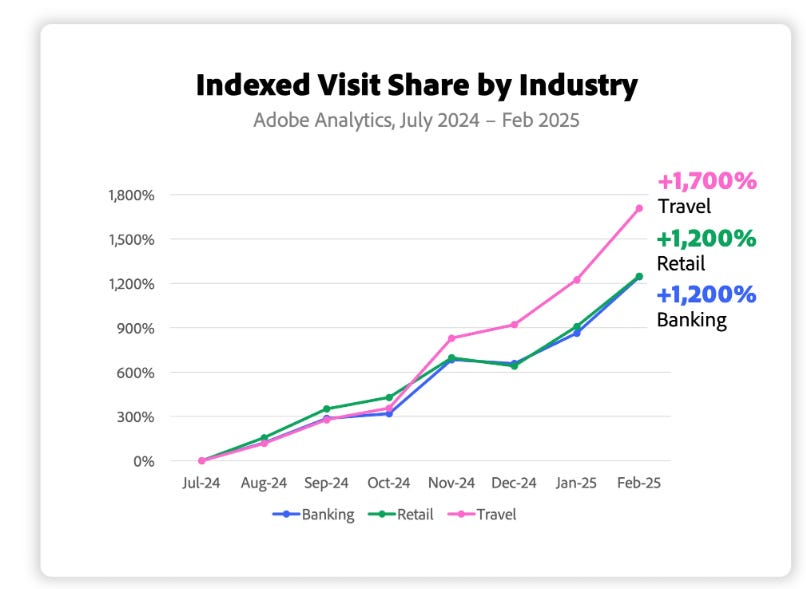

The use of AI search tools is up 1,300%. The only problem? They don't really work.

AI search tools are having a moment.

Their usage is up 1,300%, and they’re everywhere. OpenAI’s ChatGPT rolled out its Search tool late last year. Anthropic’s Claude introduced its version a few weeks ago, joining a crowded market with OG AI search tools like Perplexity, Google’s Gemini, and Microsoft Copilot.

According to new research from Adobe, consumers are using AI search tools for everything from research (55%) to product recommendations (47%) to online shopping (39%). In tech circles, it's impossible to go five minutes at a happy hour without hearing someone exclaim, "I don't even use Google anymore!"

And listen, it’s easy to see the appeal of these tools. Google has been ruined by the Content Flywheel from Hell, in which "growth hackers" churn out AI-generated content for the sole purpose of tricking another AI into ranking it highly. It’s incredibly appealing to think that AI can just do the Googling for us and find the information we’re looking for from reputable sources.

The only problem? AI search tools don’t really work. They hallucinate at a higher rate than Adam Neumann at Burning Man. And most people have no clue.

AI Search still gets it wrong most of the time

In February, the BBC studied the accuracy of four popular AI search tools (ChatGPT Search, Perplexity, Gemini, and Microsoft Copilot) and found that 51% of AI Search inquiries came back with significant issues, and 91% had at least some problems.

The issues were what we’re used to seeing from AI chatbots: introducing factual errors, altering quotes, or just making up things that people didn’t say. You know, casual things like claiming that people are running Hamas who are definitely NOT running Hamas.

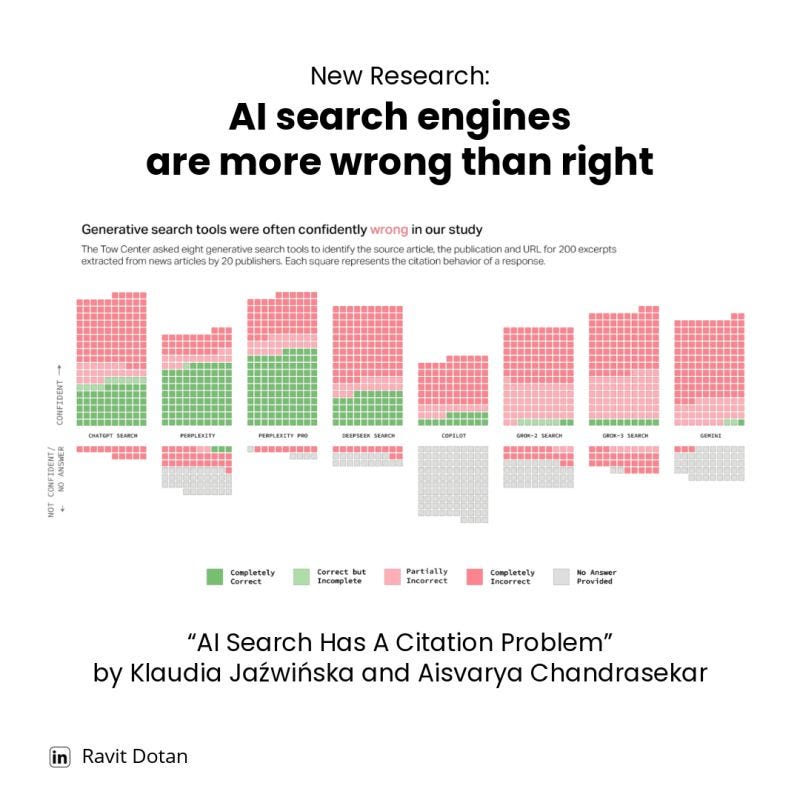

In another study last month, two researchers from the Tow Center for Digital Journalism at Columbia University, Klaudia Jaźwińska and Aisvarya Chandrasekar, found that AI search tools made errors in most instances.

The researchers’ test was simple: They gave eight prominent search tools an excerpt from an article and asked them to provide the article title, date of publication, publication name, and URL. The test was designed to be easy for the AI; in each of the 1,600 tests they ran, the correct article appeared within the first three results if you just put it in Google.

The AI search tools got it wrong 60% of the time.

Perplexity performed best, only getting it wrong 37% of the time. Grok 3 Search had an error 94% of the time. (Way to go, Elon!) Even more alarming is that the models were incredibly confident while giving the wrong answers. What if you used the paid versions of Perplexity and Grok 3, you might ask? The problem gets even worse: the models were more likely to be overconfident when giving a wrong answer AND more likely to give you a wrong answer in the first place.

The research initiative also revealed that most of these tools are violating paywalls and do-not-crawl directives from publishers (fun!). They were able to return correct results from content they weren’t supposed to be able to access — showing that our AI overlords still do not give a fuck when it comes to scraping our content without permission or compensation.

I could go on — these are just two of many studies that show AI Search is deeply flawed. Although, apparently, that’s not going to keep investors from giving Perplexity $1 billion at an $18 billion valuation.

And it won’t keep Google from going all-in on AI Search with AI Mode (video from me on that below!), even if its AI recommends that people put glue on their pizza.

Broken by design

I write this newsletter as someone who would really like these tools to work. I basically just Google shit for a living — at any given moment, I’m either writing this newsletter or my new book or consulting for tech clients, all of which involves a ton of research.

As a result, I’ve been an early adopter of AI search tools, even though I know they’re kind of fucked. I pay $200/month for OpenAI’s Deep Research tool, its most advanced form of AI search, and had Perplexity Pro before I realized it was worthless. I also have the paid version of Claude. Despite hallucinating wildly, these paid tools are still worth it for me because:

A) It’s my job to be on the cutting edge of this stuff and …

B) I do so much research that they still save me time, even though I need to double-check every source. It’s like having the fastest, hardest-working intern on earth … who is perpetually on acid. ChatGPT Deep Research’s analysis is often marvelous, sometimes nonsensical, and occasionally comes back to my desk with a starry glimmer in its full-moon pupils and a Word document filled with gibberish. At $200/month, I’ll take it.

My concern isn’t that these tools don’t provide any value; it’s that most people aren’t aware of their limitations because our tech overlords advertise them as being far more reliable than they really are. Some of the most tech-savvy people I know are surprised to learn that generative AI tools still hallucinate one out of every five times on average. As a result, they fail to double-check the information that these tools give them, which can pretty easily lead to some bad mistakes.

That makes these tools dangerous as they become more popular, and it’s unclear if this issue will ever go away. It’s been 30 months since ChatGPT came out, and the hallucinations remain.

According to leading AI researchers, that’s a flaw in their design — they’re built to please and would rather present a wrong answer than say, “I don’t know.” As José Hernández-Orallo, a professor at Spain’s Valencian Research Institute for Artificial Intelligence, told The Wall Street Journal, "The original reason why they hallucinate is because if you don’t guess anything, you don’t have any chance of succeeding."

If you use these tools, exercise caution. Validate any information they give you with the original source before you use it in your work. And for the love of god, do NOT put any Elmer’s on your pepperoni.

Marketing advice of the week

“Either AI will work for you or you’ll work for AI.” — Seth Godin

Godin joined my CMO meetup last Friday for an AMA and dropped this line, which I think is a much more accurate twist on the “AI won’t replace you — a human using AI will replace you” adage we see plastered all over LinkedIn.

We’re past the point where you can opt out of AI, even if you hate it on ethical grounds. The only move is to figure out where these tools can be helpful and treat them like part of your team, whether it’s:

Automating the bullshit busywork that fills up your team’s day (notetaking, landing page copy, technical SEO, etc.)

Getting in-depth insights into a new industry or stress-testing product positioning (20% will be hallucination issues, but the 80% that’s correct is hugely valuable and will save you a ton of time).

Serving as a brainstorming partner. Godin thinks every CMO should spend an hour asking Claude to interrogate their strategic decisions. His favorite prompt? Give it your strategy, and ask Claude to ask you 40 hard questions about it.

AI isn’t helpful for everything, and there are places where using it can be straight-up harmful. (More on that below.) But you need to be in a power position where AI is working for you — not the other way around.

Content advice of the week

“AI shouldn’t be the posts, they should power the posts.” — Rachel Karten

If you’re not subscribed to Rachel Karten’s excellent social media Substack, Link in Bio, you should be. I loved her piece this week on how we’re likely to see more and more brands stand out on social with “proof of reality” posts that show they don’t use AI, particularly now that ChatGPT’s native image generation tool can create images indistinguishable from reality. Her analysis of why some brands should reject AI is especially sharp:

To explain why brands will want to show “proof of reality”, it’s important to explain why brands might not want to be associated with AI creative in the first place.

Brands that use AI images or videos on social—whether perceivable or not—are making a statement to the consumer about craft. If your brand cares about how your product is made, then it would seem incongruous to use AI to mimic the style of a maker.

If you — or your brand — care about craft, then use AI to storyboard and sell the big idea. But don’t use it to replace the magic of human collaboration.

Tool of the Week

(This section is NOT sponsored, although if someone offered me enough money, I’d probably sell out and sponsor it.)

Excalidraw: My friend Luke turned me on to this tool this week, and I used it to create the Content Flywheel from Hell graphic above in about 10 minutes. It’s the best whiteboard illustration tool I’ve ever tried, and I’m going to abuse the hell out of it for newsletter and social graphics.

If you liked this post, you’ll also like:

How AI Nearly Tricked Me Into a Career-Ending Mistake

Why the Hell Is OpenAI Training a Creepy Creative Writing Model?

AI and the Science of Creativity

Thanks for reading! If we haven’t met before, I’m Joe Lazer. I’m a journalist turned marketer: I spent eight years building Contently while leading marketing and content strategy, then led marketing at an AI company called A.Team. I wrote a best-selling book on the art and science of storytelling called The Storytelling Edge, and have spent the last few years writing this newsletter to show marketers and creatives how to create content that stands out in the AI Age. Subscribe to get fresh analysis and frameworks in your inbox each week.
