How Writers Can Win the AI Content Wars — Before It’s Too Late

Tech companies want to sway us towards apathy about AI's use in content creation. We can start to fight back with one simple new norm.

I’ve been thinking a lot about this new Fiverr ad, a bizarre piece of corporate wish fulfillment with the refrain that “Nobody cares if you use AI.”

On the surface, it’s just making fun of people on LinkedIn who post incessantly about their ChatGPT hacks. But just below the surface, it has a deeply nihilistic message: You shouldn’t care if content is made by AI. And if you do, you’re nobody. Your opinion doesn’t matter.

It’s just one ad, but inside the tech world, the sentiment is everywhere. And for those of us who care about the value of human creativity, it’s a dangerous message we need to guard against.

Do people care if you use AI?

Do people care if you use AI to create content? I spend a lot of time researching and writing about AI and the future of work, and I can tell you that, yes, they certainly do:

62% of consumers are less likely to engage with or trust content if they know AI created it.

90% want transparency on whether AI created an image.

71% say they’re worried about being able to trust what they see or hear because of AI.

83% say it should be required by law to label AI-generated content.

I could go on. Just 10% of people say they’re more excited than concerned about AI, while most are more concerned than excited. This data surprises many people I know! There’s a massive gap between how people in tech and advertising view AI and how average consumers view it. For instance, a Yahoo and Publicis survey found that while 77% of advertisers view AI favorably, just 38% of consumers say the same.

But Fiverr — a platform founded on the ethos that creative work is worth less than a Crunchwrap Supreme — would like it if nobody cared. So would the executives Fiverr is targeting with this ad. If your audience doesn’t care whether content is created by AI, that means execs can fire 90% of their content team and buy bulk packages of cheap, ChatGPT-penned SEO and LinkedIn slop from Fiverr instead. Do more with less, amirite?

Fiverr’s ad is a form of corporate wish fulfillment; it tells Fiverr’s target audience (marketing execs, CEOs) what they want to hear while also swaying a skeptical public towards AI apathy.

For creatives, this is how the AI content wars will be lost — not with a bang but a hand wave. The biggest risk to writers isn’t AI itself — it’s that people will stop valuing human craft, creativity, and artistic choices. The Fiverr ad is just the beginning. In the coming years, the corporate world is going to spend a lot of money convincing people they shouldn’t care if content is made by AI. It’s time we fought back.

How to win the AI content wars

I’m not dogmatically anti-AI. I’ve spent the past three years working with a future-of-work-focused AI startup. I incorporate AI into my creative process. I’ll talk out the issues I’m having with my book with Claude over a late-night drink.

I’ve also been exposed to how many tech leaders and corporations talk about generative AI when reporters aren't present. While they may pay lip service to the value of human creativity, it’s in their best financial interest if the general public becomes apathetic about how AI is used.

This is how you get OpenAI’s (now ex) CTO saying, “Some creative jobs will be replaced, but maybe they shouldn’t have been there in the first place,” and then being forced to backtrack.

We’re in a precarious moment. Two years post-ChatGPT, we haven’t established any cultural or ethical norms about using generative AI in content creation. There’s no clear consensus of right and wrong, and without one, our corporate overlords will take advantage of the ambiguity to devalue creative work.

It’s time we fixed that. And by we, I mean you and me. Not the platforms.

Almost every day, I see another “Note” on Substack pleading with the platform to update its terms of service to ban the use of generative AI without disclosure. But if we wait for the platforms to create new AI norms, we’ll be waiting forever. We need to make them ourselves.

Ethical norms aren’t bestowed from the heavens. They’re fluid. Plagiarism, for instance, wasn’t a serious infraction until the 18th century — which is why both Shakespeare and Leonardo da Vinci “borrowed” way more from other artists than we’d be comfortable with today. The Renaissance brought an emphasis on individualism and individual genius. Writers and academics wanted to protect their ideas and the profits that came from them. So, they exerted their influence by making copying without attribution a taboo practice.

So, what should our new norms be about generative AI? I don’t have all the answers, but I have one suggestion to get us started.

A new norm that’ll help us start to win the AI Wars

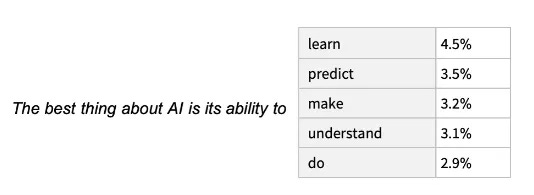

Generative AI is complicated because it doesn’t clearly violate an existing norm. Frontier models like ChatGPT and Claude rarely plagiarize because they don’t ingest creative works wholesale. Instead, they use them as training data to help them predict the next word in a string of text. (Stephen Wolfram has a must-read primer on the inner workings of generative AI.)

Sure, I find it incredibly irritating that ChatGPT has been trained on my last book — after all, it was copyrighted, no one asked me for permission, and I didn’t see a single cent of compensation! But it’s a legal gray area that may fall under Fair Use. ChatGPT can impersonate my writing, and while that impersonation is QUITE insulting — like looking at yourself in a funhouse mirror — it’s unlikely to directly plagiarize my work.

The best comparison to using GenAI might be ghostwriting, which is also mired in ethical murkiness since many vain CEOs don’t disclose when they use a ghostwriter on a book. For some reason, that’s considered acceptable. But no self-respecting writer would ever use someone else’s words without disclosure or citing their sources, so I’m proposing one simple, new norm:

New Norm: Disclose how you used Generative AI in every post.

Maybe you used GenAI as part of your research process, as I did with this post. Maybe you asked for feedback after you finished a first draft. Maybe you rewrote a paragraph with Claude’s help. Maybe it wrote an entire section, and then you edited it. I’m not calling for us to judge one another; I’m just asking for transparency: a little section at the bottom of each post that discloses how you used it. I'm calling on both independent writers and content teams at brands to pick up this practice.

If we start a grassroots movement of voluntary disclosure, I believe we’ll accomplish a few things:

We’ll learn from each other.

Contrary to what Fiverr thinks, I’m very interested in how other writers are using AI, but it often feels like we’re terrified of talking about it.

I’ll start: I never let AI write for me, but I use Perplexity and Claude for research (while being sure to fact-check for hallucinations). I’ll often talk through ideas with Claude like I would with an editor. I use Opus for social video editing. Occasionally, I’ll use Claude to brainstorm a pop culture reference or sharpen a metaphor.

Do any of these practices violate the trust or expectations of my readers? I don’t think so, but I’d like to know if they do! That’s why I’m advocating for the disclosure of GenAI usage, even if you just use it for research or feedback and don’t use any GenAI-generated text. Let’s learn from one another and figure out where the line should be.

We’ll build trust with readers.

Psychologically, self-disclosure is one of the most effective ways to build trust with others, which seems particularly true with AI; in one study, disclosing the use of AI in ads boosted trust in the brand by 96%. There’s power in transparency.

Want your content to stand out? Want to gain a leg up over your competition, whether that's a competing company or another newsletter in your space? This is a way to do that!

We’ll create a new norm that will spawn others.

If disclosing your AI usage becomes the new normal among writers, we won’t need platform policy changes. (Although that’d be nice.) It’ll just become the expectation, like citing sources. I also think that this transparency will help us reach a consensus on other norms around generative AI as we start a conversation about where we should draw the line.

We’ll distinguish and increase the value of human-generated content.

This is the big one—the reason why I believe voluntary disclosure can be the first step for writers in winning the AI content wars. The internet sucks right now, and that means we have an opportunity to build something new and better.

Social media is in decay. Facebook has now been overrun by AI-generated shrimp Jesus posts that millions of people are worshipping — a true Kurt Vonnegut dystopia come to life. X has become a cesspool of white nationalist eels. Mark Zuckerberg has plans to flood Instagram with creepy AI-generated content. TikTok is openly trying to become QVC with their shop feature as influencers shamelessly hawk crappy products for a taste of late-capitalist glory. LinkedIn cuts to the chase and actively begs users to post AI-generated broems.

Across platforms, AI seems set to drown a flailing internet, flooding Google with SEO spam and Instagram, Facebook, and TikTok with deepfakes. Even Amazon is swamped with thousands of AI-generated books every day that rip off the work of established authors.

Gen Alpha and Gen Z are increasingly quitting social media, and most people feel like the state of social media has decayed. Through all of this, I’ve developed a sort of anarchist optimism. What if AI breaks everything, and something better emerges in its place?

I believe we’re about to see the internet split in two. On one side, the AI-infested legacy social platforms of today. On the other side, a renaissance of niche, small, personality-driven media brands that people flock to because they are distinctly human — run by real people readers can trust.

Platforms like Substack are providing the infrastructure for these new media brands, and as writers, one of the best ways to signal to readers that we are human is to disclose how we use generative AI (if at all). After all, the AI slop farms on the dark side of the internet aren’t going to do the same. Again, this doesn’t mean you’re not allowed to use generative AI. It just means you’re transparent with your audience about how you used it.

All it takes is a little disclosure.

At the risk of sounding like a thirsty YouTuber, I’d love to hear what you think in the comments. Do you use AI in your creative process? Where do you draw the line? What other new norms can we set? Do you think my proposal is completely idiotic? It’s okay if you do! We don’t need to agree — we just need to stand up and show that we care.

How I used GenAI while writing this newsletter

I used Claude as a starting point in researching the history of plagiarism.

I used Perplexity to find additional stats on how consumers feel about AI usage in content.

I use Grammarly as a copy editor but don’t use the GenAI features because they’re incredibly annoying. Do I want to make this sound more positive?! Absolutely not. Please stop asking.

Recommended Reads

The State of Culture, 2024 (Ted Gioia): You may have read this already, but I just did, and damn — it nails our dopamine-addled world.

What is ChatGPT doing and why does it work? (Stephen Wolfram): Even if you hate GenAI, know thy enemy. This overview is simply excellent.

You’ll Grow Out of It (Jessi Klein): The most underrated book of humor essays of all time and the perfect holiday gift for anyone who can read.

I’m the best-selling author of The Storytelling Edge and a storytelling nerd. Subscribe to this newsletter to get storytelling and audience-building strategies in your inbox each week.
